<article>

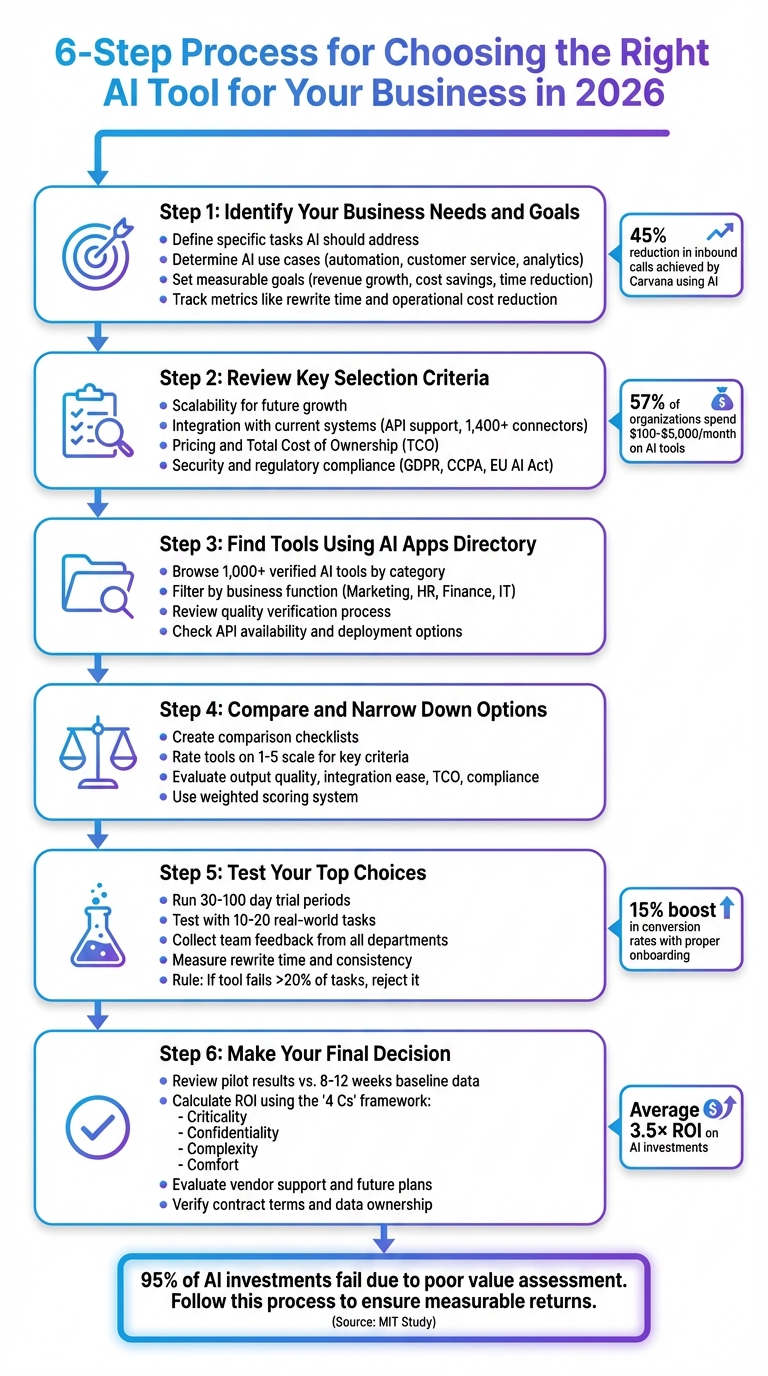

6-Step Process for Choosing the Right AI Tool for Your Business in 2026

Enhance Business Efficiency: Selecting the Right AI Tools for Your Needs

Choosing the Right AI Tool in 2026: Key Takeaways

AI tools are now essential for businesses, but picking the right one can be overwhelming. Here’s a quick guide to ensure you make the best choice:

Start with These Steps:

- Define Your Needs: Identify specific tasks and goals AI should address (e.g., automating reports, improving customer service).

- Set Clear Metrics: Use measurable goals like cost savings, time reduction, or revenue growth to evaluate success.

- Evaluate Key Criteria: Focus on scalability, system integration, pricing, and compliance with data security laws.

- Test Tools: Run pilot tests with real tasks to assess performance, ease of use, and ROI.

- Review Vendor Support: Ensure the provider offers strong customer support, updates, and flexible contracts.

Common Use Cases:

- Automating repetitive workflows (e.g., HR, IT, supply chain).

- Enhancing productivity (e.g., content creation, analytics).

- Improving customer experiences (e.g., AI chatbots, predictive insights).

Tools to Consider:

Use directories like AI Apps to compare and shortlist tools by categories and features. Look for verified options and test them against your specific requirements.

Final Tip:

Always match the tool to your business needs, not trends. Focus on measurable outcomes to ensure your investment delivers real value. </article>

Step 1: Identify Your Business Needs and Goals

Before diving into AI tools, take a step back and define what you need them to do. Start by identifying the tasks your team handles regularly - whether it’s writing, coding, managing meetings, providing customer support, or simplifying document workflows. The key is to align these tasks with clear, tangible outcomes rather than simply following the latest tech trends.

"To create successful generative AI or traditional AI solutions, begin by clearly identifying the specific measurable business goals or needs that you want to address." – Google Cloud

When choosing tools, think about the level of risk involved. For low-risk tasks, like drafting internal documents, opt for cost-effective and flexible models. For high-risk tasks, such as legal drafting or financial analysis, prioritize more robust systems with oversight. This "risk ladder" approach helps you avoid overspending on routine work while not cutting corners where precision is critical. From here, focus on identifying AI use cases that address challenges specific to your industry.
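To make the risk ladder concrete, here is a minimal Python sketch, assuming you classify each task type yourself. The task names, tier labels, and the rule that unclassified tasks fall back to the high-risk rung are illustrative assumptions, not features of any particular vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rung:
    """One rung of the risk ladder: which model tier to use and whether a human reviews output."""
    model_tier: str
    human_review: bool


# Hypothetical rungs; rename them to match the models you actually license.
LOW_RISK = Rung(model_tier="fast, low-cost model", human_review=False)
HIGH_RISK = Rung(model_tier="strong model with oversight", human_review=True)

# Hypothetical task classification based on the examples in this step.
TASK_RISK = {
    "internal_doc_draft": LOW_RISK,
    "legal_drafting": HIGH_RISK,
    "financial_analysis": HIGH_RISK,
}


def route(task_type: str) -> Rung:
    """Return the rung for a task; unclassified tasks fall back to the high-risk rung."""
    return TASK_RISK.get(task_type, HIGH_RISK)


if __name__ == "__main__":
    for task in ("internal_doc_draft", "financial_analysis", "vendor_contract_review"):
        rung = route(task)
        print(f"{task}: {rung.model_tier}, human review={rung.human_review}")
```

Defaulting unknown tasks to the stricter rung means a new use case gets oversight until someone deliberately classifies it as low risk.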

Determine Your AI Use Cases

Once you’ve outlined your business needs, pinpoint AI applications that directly tackle your core tasks. By 2026, AI tools have become especially useful in areas like process automation, customer service, analytics, content creation, and productivity improvements.

For example, in 2025, British Columbia Investment Management Corporation (BCI) adopted Microsoft 365 Copilot, saving 2,300 person-hours through automation and cutting the time spent on internal audit reports by 30%. Between 2023 and 2025, Carvana reduced inbound calls per sale by over 45% by using an AI agent named "Sebastian" to analyze customer interactions.

Similarly, in 2025, Moglix, an Indian digital supply chain platform, used Vertex AI for vendor discovery, boosting their sourcing team’s efficiency fourfold - from INR 12 crore to INR 50 crore per quarter. Spanish technology firm atmira also achieved impressive results, improving debt recovery rates by 30–40% and slashing operational costs by 54% with an AI platform that handled 114 million monthly requests.

Tailor your AI use cases to the specific challenges in your industry. For instance:

- Customer support teams often face overflowing ticket queues and repetitive questions.

- IT departments may deal with too many tools and slow root cause analysis.

- HR teams can struggle with manual application processing.

- Supply chain teams frequently encounter inaccurate demand forecasts and limited transparency.

Set Measurable Goals

Goals like "improving efficiency" are too vague to evaluate an AI tool’s success. Instead, set clear, measurable objectives tied to outcomes like revenue growth, cost savings, or a competitive edge. Metrics could include financial gains, faster time-to-market, or improved customer experience, such as higher CSAT scores.

In October 2025, AdVon Commerce used Gemini and Veo to process a 93,673-product catalog for major retailers in under a month - a task that previously took a year. This effort led to a 30% boost in top search rank placements and a $17 million revenue increase within just 60 days. Similarly, Toyota saved over 10,000 man-hours annually by deploying an AI platform that empowered factory workers to create machine learning models.

It’s important to define your success criteria early. For example, track how much human effort is required to refine AI-generated content by measuring "rewrite time". If an AI model fails more than 20% of your evaluation tasks, the supposedly "cheap" option might end up costing more due to additional quality checks and editing. Keep an eye on metrics like reduced operational costs, fewer agent working hours, or better first-contact resolution rates.
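One way to track these criteria during evaluation is sketched below in Python. It assumes each test task is recorded as pass/fail plus the minutes a person spent rewriting the output; the `EvalResult` structure and the sample data are hypothetical, and the 20% threshold is the rule of thumb from the paragraph above.

```python
from dataclasses import dataclass

FAILURE_THRESHOLD = 0.20  # rule of thumb from the text: >20% failed tasks is a warning sign


@dataclass
class EvalResult:
    task_id: str
    passed: bool            # output met your quality bar without a full redo
    rewrite_minutes: float  # human time spent refining the output ("rewrite time")


def summarize(results: list[EvalResult]) -> dict:
    """Aggregate failure rate and average rewrite time across evaluation tasks."""
    if not results:
        raise ValueError("need at least one evaluation result")
    n = len(results)
    failure_rate = sum(1 for r in results if not r.passed) / n
    avg_rewrite = sum(r.rewrite_minutes for r in results) / n
    return {
        "failure_rate": failure_rate,
        "avg_rewrite_minutes": avg_rewrite,
        "exceeds_threshold": failure_rate > FAILURE_THRESHOLD,
    }


if __name__ == "__main__":
    # Hypothetical results: 3 of 10 tasks failed and needed heavy rewriting.
    results = [
        EvalResult(f"task-{i}", passed=i >= 3, rewrite_minutes=5.0 if i >= 3 else 25.0)
        for i in range(10)
    ]
    print(summarize(results))
```

Recording rewrite time alongside pass/fail matters because a tool can "pass" most tasks and still consume more editing time than it saves.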

Step 2: Review Key Selection Criteria

After defining your business needs and setting measurable goals, the next step is to focus on the technical and operational factors that determine whether an AI tool aligns with your organization. It’s not about choosing the "smartest" model - it’s about selecting one that fits your specific requirements, risk tolerance, and budget.

"Choosing an AI model in 2026 is not about 'the smartest model' – it is about picking the right model for the job, the risk level, and the budget." – AI Tools Business

Here’s how to evaluate tools that can genuinely add value rather than just sound impressive.

Scalability for Future Growth

Your AI tool should handle your current workload while being prepared for future growth. As your business expands, you’ll likely process more data, onboard additional users, and explore new applications. Model capacity has also grown quickly: context windows (the number of tokens a model can process in a single request) went from about 4,000 tokens in GPT-3.5 to 2 million in Gemini 2.0 Pro. Look for a solution that can take on larger inputs and higher volumes without sacrificing performance.

Consider adopting a tiered model strategy to manage costs as your usage grows. For high-volume, low-risk tasks like drafting internal emails or formatting documents, use faster, lower-cost models. Reserve advanced, more expensive models for complex tasks such as financial analysis or strategic planning. Also, review the vendor’s product roadmap to ensure future updates align with your long-term goals. Organizations already skilled in AI implementation often see better financial outcomes.
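The savings from a tiered strategy come down to simple arithmetic. This Python sketch compares routing every task to an advanced model with routing only a share of tasks there; the per-task prices are placeholder assumptions, not any vendor's actual rates.

```python
# Hypothetical per-task prices in USD; substitute your vendor's real pricing.
PRICE_PER_TASK = {"fast": 0.002, "strong": 0.05}


def monthly_cost(tasks_per_month: int, strong_share: float) -> float:
    """Blended monthly cost when `strong_share` of tasks go to the advanced model."""
    if not 0.0 <= strong_share <= 1.0:
        raise ValueError("strong_share must be between 0 and 1")
    strong_tasks = tasks_per_month * strong_share
    fast_tasks = tasks_per_month - strong_tasks
    return fast_tasks * PRICE_PER_TASK["fast"] + strong_tasks * PRICE_PER_TASK["strong"]


if __name__ == "__main__":
    volume = 100_000
    print(f"Everything on the strong model: ${monthly_cost(volume, 1.0):,.0f}")
    print(f"Tiered (20% strong, 80% fast):  ${monthly_cost(volume, 0.2):,.0f}")
```

With these assumed prices, sending only 20% of 100,000 monthly tasks to the strong model cuts spend from about $5,000 to about $1,160. The real gap depends on your price sheet and on how many tasks genuinely need the stronger model.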

To evaluate performance, don’t rely on a single, ideal test. Instead, run 10–20 real-world tasks from your workflow across multiple trials. This approach gives a clearer picture of how the tool will perform under actual conditions.
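Repeating each task lets you measure consistency as well as average quality. Below is a small Python sketch, assuming you score each run on a 1–5 scale; the task names, scores, and the spread limit of 1.0 are hypothetical choices.

```python
import statistics

SPREAD_LIMIT = 1.0  # hypothetical: max acceptable std dev on a 1-5 quality scale


def consistency_report(scores_by_task: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Mean and population standard deviation of repeated-trial scores for each task."""
    return {
        task: (statistics.mean(scores), statistics.pstdev(scores))
        for task, scores in scores_by_task.items()
    }


def inconsistent_tasks(scores_by_task: dict[str, list[float]], limit: float = SPREAD_LIMIT) -> list[str]:
    """Tasks whose scores vary more than `limit` across runs of the same prompt."""
    report = consistency_report(scores_by_task)
    return [task for task, (_, spread) in report.items() if spread > limit]


if __name__ == "__main__":
    # Hypothetical scores from three runs of the same prompt per task.
    scores = {
        "summarize_report": [4, 4, 5],
        "draft_customer_email": [5, 2, 4],
        "extract_invoice_fields": [3, 3, 3],
    }
    for task, (mean, spread) in consistency_report(scores).items():
        print(f"{task}: mean={mean:.2f}, spread={spread:.2f}")
    print("Needs review:", inconsistent_tasks(scores))
```

A task with a good average but a wide spread, like the hypothetical customer email above, is often riskier in production than one that scores lower but predictably.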

Finally, assess how well the tool integrates with your existing systems to ensure a smooth adoption process.

Integration with Current Systems

For any AI tool to succeed, it must integrate seamlessly with your existing software stack. Check if the vendor offers robust API integrations and SDK support that allow the tool to work within your systems without causing major disruptions. For example, Azure Logic Apps offers over 1,400 connectors to simplify enterprise integration.

Look for tools with modular designs that can easily fit into your workflows. Avoid rigid, one-size-fits-all solutions that require complicated workarounds. Ideally, the tool should support Retrieval-Augmented Generation (RAG), enabling it to pull accurate data from platforms like Slack, Jira, or Salesforce.
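At its core, RAG retrieves the most relevant snippets from your own sources and places them in the prompt. The Python sketch below shows that flow under simplifying assumptions: it uses bag-of-words cosine similarity as a stand-in for the embedding models real RAG systems use, and the document IDs and text are hypothetical exports, not real Slack, Jira, or Salesforce APIs.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """IDs of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(docs[d]))), reverse=True)[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Prepend retrieved snippets so the model answers from your data, not memory."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical snippets exported from a ticket tracker, a chat channel, and a CRM.
    docs = {
        "jira-1042": "Login outage was fixed by rotating the expired SSL certificate.",
        "slack-ops": "Reminder: quarterly security review is next Tuesday.",
        "crm-acme": "Acme renewal is due in March; the contact prefers email.",
    }
    print(build_prompt("How was the login outage fixed?", docs, k=1))
```

When evaluating a vendor's RAG support, the questions are the same ones this sketch raises: which sources it can index, how it ranks relevance, and whether answers cite the snippets they came from.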

Before implementation, audit your internal data quality. AI tools rely on clean, well-organized data to function effectively. Identify bottlenecks such as manual data entry, compliance delays, or repetitive approval steps. This helps ensure the tool addresses real challenges rather than providing superficial features.
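A first-pass data audit can be as simple as counting missing values and duplicates. This Python sketch assumes your records are exported as a list of dictionaries; the field names and sample CRM rows are hypothetical, and matching duplicates on the required fields only is a simplifying choice.

```python
def audit(records: list[dict], required: list[str]) -> dict:
    """Count missing required fields and duplicate records before feeding data to an AI tool.

    Duplicates are matched on the required fields only, which is a simplifying choice.
    """
    missing = {field: 0 for field in required}
    seen = set()
    duplicates = 0
    for record in records:
        for field in required:
            if record.get(field) in (None, ""):
                missing[field] += 1
        key = tuple(record.get(field) for field in required)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"records": len(records), "missing": missing, "duplicates": duplicates}


if __name__ == "__main__":
    # Hypothetical CRM export with one blank email, one missing region, and one duplicate.
    rows = [
        {"id": "1", "email": "ana@example.com", "region": "EU"},
        {"id": "2", "email": "", "region": "US"},
        {"id": "1", "email": "ana@example.com", "region": "EU"},
        {"id": "3", "email": "li@example.com"},
    ]
    print(audit(rows, ["id", "email", "region"]))
```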

In the legal field, for instance, 74% of professionals already use AI, and 90% plan to expand its use in the next year. As Zuhair Saadat, former Contracts Manager at Signifyd, remarked:

"I feel like if we're not going to use AI within Legal at some point, we're going to be behind our legal peers."

Once integration capabilities are clear, you can move on to evaluating costs and overall value.

Pricing and Total Cost

After confirming the technical fit, it’s time to examine the financial impact. Beyond subscription fees, the total cost of ownership (TCO) includes integration expenses, implementation time, data preparation, and ongoing maintenance. For instance, data preparation alone - like cleaning and annotating datasets - can account for 15–25% of a project’s total cost. Cleaning a dataset with 100,000 samples might take 80 to 160 hours.
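The cost components above can be rolled into a rough first-year TCO estimate. This Python sketch is illustrative only: every input value is a placeholder, and scaling the 80–160 hours per 100,000 samples benchmark linearly to other dataset sizes is an assumption.

```python
from dataclasses import dataclass

# Benchmark from the text: roughly 80-160 hours to clean 100,000 samples.
# Scaling it linearly to other dataset sizes is a simplifying assumption.
CLEANING_HOURS_PER_100K = (80, 160)


@dataclass
class CostInputs:
    monthly_subscription: float  # vendor fee per month
    integration_one_time: float  # connectors, API work, setup
    implementation_hours: float  # internal staff time for rollout
    hourly_rate: float           # loaded cost of one staff hour
    samples_to_clean: int        # records that need cleaning or annotation
    monthly_maintenance: float   # monitoring, updates, support
    months: int = 12             # evaluation horizon


def tco(c: CostInputs, high_cleaning_estimate: bool = False) -> dict[str, float]:
    """Total cost of ownership over the horizon, broken down by component."""
    low, high = CLEANING_HOURS_PER_100K
    per_100k = high if high_cleaning_estimate else low
    cleaning_hours = per_100k * c.samples_to_clean / 100_000
    parts = {
        "subscription": c.monthly_subscription * c.months,
        "integration": c.integration_one_time,
        "implementation": c.implementation_hours * c.hourly_rate,
        "data_prep": cleaning_hours * c.hourly_rate,
        "maintenance": c.monthly_maintenance * c.months,
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    # Placeholder inputs for illustration.
    inputs = CostInputs(
        monthly_subscription=1_200, integration_one_time=10_000,
        implementation_hours=120, hourly_rate=75,
        samples_to_clean=100_000, monthly_maintenance=300,
    )
    for label, high in (("low", False), ("high", True)):
        costs = tco(inputs, high_cleaning_estimate=high)
        share = costs["data_prep"] / costs["total"]
        print(f"{label} cleaning estimate: total ${costs['total']:,.0f}, data prep {share:.0%}")
```

With these placeholder inputs, data preparation comes to roughly 14% to 24% of the first-year total, close to the 15–25% range cited above, and the subscription fee is only about a third of the total cost.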

Off-the-shelf AI tools typically cost between $100 and $5,000 per month, with 57% of organizations reporting spending within this range. Custom solutions, however, can be far more expensive. Developing a custom finance chatbot, for example, might cost $20,000 to $80,000, while pre-built chatbot platforms usually range from $99 to $1,500 per month. Additionally, hiring specialized talent like MLOps engineers or data scientists may be one of the largest expenses, with U.S. salaries ranging from $90,000 to $200,000 annually.

Despite these costs, AI investments often deliver strong returns. On average, businesses see a 3.5× return on investment, with 5% of companies reporting returns as high as 8×. To maximize ROI, break complex tasks into smaller, manageable steps that can be handled by less expensive models. Also, keep an eye on how costs evolve as your usage scales. A model that starts out affordable might become too costly as token usage increases.
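To see when a model that starts out affordable stops being affordable, project token spend against your budget. This Python sketch assumes usage compounds at a constant monthly rate and the per-token price stays flat; the usage, growth rate, price, and budget are hypothetical.

```python
from typing import Optional, Tuple


def first_month_over_budget(
    start_tokens: float,
    monthly_growth: float,
    price_per_million: float,
    monthly_budget: float,
    horizon_months: int = 36,
) -> Optional[Tuple[int, float]]:
    """Return (month, cost) for the first month token spend exceeds the budget, else None.

    Assumes usage compounds at a constant monthly growth rate and the price stays flat.
    """
    tokens = start_tokens
    for month in range(1, horizon_months + 1):
        cost = tokens / 1_000_000 * price_per_million
        if cost > monthly_budget:
            return month, cost
        tokens *= 1 + monthly_growth
    return None


if __name__ == "__main__":
    # Hypothetical: 50M tokens in month 1, growing 20% a month, at $10 per million tokens.
    result = first_month_over_budget(50_000_000, 0.20, 10.0, monthly_budget=2_000)
    if result:
        month, cost = result
        print(f"Budget exceeded in month {month} (${cost:,.0f})")
    else:
        print("Stays within budget over the horizon")
```

In this hypothetical, a $500 first month grows past a $2,000 budget by month 9. Rerunning the projection with a cheaper tier's price shows how much headroom task splitting buys.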

| Business Size | AI Cost Per Year (Average) |
|---|---|
| Startup / Micro-Enterprise (1-10 employees) | $50 – $500 |
| Small Business (11-50 employees) | $501 – $2,500 |
| Mid-Sized Business (51-250 employees) | $50 – $5,000 |
| Enterprise (501+ employees) | $50 – $25,000 |

Security and Regulatory Compliance

Data protection and compliance are non-negotiable. In 2026, 92% of AI vendors claim broad data usage rights - far higher than the 63% average for typical software. Yet, only 17% explicitly commit to following all applicable laws in their contracts. This makes it critical to carefully review vendor agreements and negotiate intellectual property rights. Ensure ownership of input data, generated outputs, and models trained on your data is clearly defined.

Complying with standards like GDPR, CCPA, or the EU AI Act, along with ongoing monitoring and security updates, can add 15–25% to overall costs. Security gaps are a major risk: 75% of organizations fail to scale AI effectively, often due to compliance and security shortcomings.

When evaluating tools, prioritize those offering unified security and compliance frameworks, especially if you plan to deploy AI across multiple departments. For sensitive tasks - like legal drafting, financial analysis, or handling customer data - choose solutions that include oversight mechanisms and require human review for high-risk outputs.

Step 3: Find Tools Using AI Apps Directory

After establishing your criteria and evaluation methods, it’s time to track down tools that align with your business needs. With so many AI vendors out there, it’s easy to feel overwhelmed - especially when marketing promises don’t always match real-world capabilities. That’s where AI Apps comes in. This platform offers a curated directory of over 1,000 AI tools, neatly organized by business function and verified through hands-on testing.

The directory simplifies your search by categorizing tools into areas like Marketing, HR, Finance, and IT. This approach ensures you’re comparing apples to apples - for example, a writing assistant won’t get lumped in with an automation platform. Each listing includes details like API availability, compatibility with systems like Salesforce and Microsoft 365, and deployment options (cloud, on-premise, or hybrid). Essentially, it bridges your earlier criteria with practical, vetted options tailored to your needs.

How to Navigate AI Apps

Instead of casting a wide net with generic searches like "AI tools", focus on filtering solutions based on your specific workflows. The directory allows you to browse categories and then drill down into subcategories such as talent acquisition, legal operations, or cyber defense.

To make things even easier, AI Apps offers curated views like "AI Tools by Team", which highlight solutions tailored for Sales, Ops, and Support teams. You can also filter tools by model tiers - for instance, opting for "Fast" models for quick, low-risk tasks or "Strong" models for critical, high-stakes analyses. This lets you weigh cost against accuracy and pick what suits your priorities.

The directory spans 9 to 20+ business categories and is reportedly used by Fortune 500 companies to find vetted automation solutions. On top of that, enterprise platforms like Azure Logic Apps provide more than 1,400 connectors to integrate AI tools with your existing software.

Understanding the Quality Verification Process

Once you’ve shortlisted tools, examine their quality through AI Apps’ verification process. This multi-step system is designed to ensure that each listed tool meets rigorous standards. Reviewers go beyond vendor claims by testing tools in real scenarios - building campaigns, generating drafts, and evaluating actual results. They assess critical factors like output accuracy, bias, adherence to brand voice, and how much human editing is needed.

The process also examines vendor reliability, data security, and compliance with regulations like GDPR and the EU AI Act. Tools are audited for data retention policies and other privacy standards. To maintain accuracy, claims are double-checked by a second reviewer, and tools are re-tested following significant updates.

"AI can multiply output – but only if you pick the right tools, add guardrails, and stay compliant." – AI Tools Business

This rigorous approach helps you avoid common pitfalls, like the 45% of AI projects that fail due to poor vendor support and responsiveness. By leveraging the directory’s filters and verified listings, you can quickly zero in on tools that meet both your technical and compliance needs, setting you up for success in the next comparison phase.


Step 4: Compare and Narrow Down Your Options

Now that you've established your criteria and shortlisted tools using AI Apps, it's time to compare their performance under the same conditions. While vendor websites often showcase impressive features, the real test lies in how these tools perform when given identical tasks. This approach lets you see which tool delivers practical, efficient results.

Make sure to compare tools within the same category. For instance, if you're evaluating AI writing tools, have them work on the same brief. Similarly, when assessing analytics platforms, provide them with identical datasets. This ensures a fair evaluation and helps you measure quality on an even playing field.

Create Comparison Checklists

Once you've narrowed down your list, use a detailed checklist to guide your evaluation. Build this checklist based on the key criteria you identified earlier. Start with critical factors like data privacy and compliance. For example, directly ask vendors, "Do you train your models on my data?" The only acceptable answer for enterprise use is "No".

Next, assess the technical capabilities. Does the tool support features like Retrieval-Augmented Generation (RAG), allowing it to use your internal documents as a knowledge base? Also, check for seamless integration with your existing systems - this can save significant time and effort.

"Don't just evaluate the upfront cost of the AI tool. Consider ongoing expenses, including maintenance, training, support, and any additional credits you might need - these can really rack up." – Kit Cox, Founder and CTO, Enate

Factor in long-term costs, such as training, support, and usage fees, when analyzing the total cost of ownership. Additionally, evaluate the user experience by noting how many steps or clicks it takes to achieve a result. The best tools should feel almost effortless, reducing mental strain rather than adding unnecessary friction. A tool that can handle 80% of your primary use case while adhering to privacy standards is a strong contender.

Sample Comparison Table

A weighted scoring system can help you objectively evaluate your options. Rate each tool on a 1–5 scale based on your critical criteria, then visualize the trade-offs in a table like the one below:

| Tool Name | Output Quality (1-5) | Integration Ease (1-5) | TCO ($/month) | Compliance (SOC 2 / GDPR) | RAG Support | Overall Score |
|---|---|---|---|---|---|---|
| Tool A | 5 | 4 | $1,200 | Yes / Yes | Yes | 4.5 |
| Tool B | 4 | 5 | $800 | Yes / Yes | No | 4.2 |
| Tool C | 3 | 3 | $500 | No / Yes | Yes | 3.0 |

Assign more weight to the factors that align closely with your business goals. For instance, if compliance is a top priority, immediately rule out tools that don't meet SOC 2 Type II and GDPR standards. Similarly, if speed and seamless integration are critical, focus on tools that work effortlessly with your CMS, CRM, or email platform. Manual processes, like copy-pasting, can negate the efficiency gains AI tools promise. Use this scoring system to make informed decisions and finalize the tools you want to test further.
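The scoring approach described above can be sketched in a few lines of Python. This is a hypothetical illustration: the weights are placeholders, and converting the TCO column into a 1–5 "cost" rating (5 = cheapest) is an assumption, because the sample table lists dollar amounts and does not state how its overall scores were weighted.

```python
# Hypothetical integer weights (they sum to 10); set them from your own priorities.
WEIGHTS = {"output_quality": 4, "integration_ease": 3, "cost": 3}

# Ratings on the 1-5 scale. The "cost" rating (5 = cheapest) is an assumed
# conversion of the TCO column; the sample table itself lists dollar amounts.
TOOLS = {
    "Tool A": {"scores": {"output_quality": 5, "integration_ease": 4, "cost": 2}, "soc2": True, "gdpr": True},
    "Tool B": {"scores": {"output_quality": 4, "integration_ease": 5, "cost": 3}, "soc2": True, "gdpr": True},
    "Tool C": {"scores": {"output_quality": 3, "integration_ease": 3, "cost": 4}, "soc2": False, "gdpr": True},
}


def weighted_score(scores: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Weighted average of 1-5 ratings."""
    total_weight = sum(weights.values())
    return sum(scores[k] * w for k, w in weights.items()) / total_weight


def rank(tools: dict, weights: dict[str, int] = WEIGHTS) -> list[tuple[str, float]]:
    """Drop tools failing the SOC 2 / GDPR gate, then sort the rest by weighted score."""
    eligible = {name: t for name, t in tools.items() if t["soc2"] and t["gdpr"]}
    scored = [(name, weighted_score(t["scores"], weights)) for name, t in eligible.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(TOOLS):
        print(f"{name}: {score:.1f}")
```

Treating compliance as a hard gate rather than a weighted factor removes Tool C before scoring. With these weights Tool B (4.0) edges out Tool A (3.8); shifting weight toward output quality, for example 6/2/2, puts Tool A first, which is why the weights should come from your business goals rather than defaults.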

Step 5: Test Your Top Choices

Once you've narrowed down your options, it's time to roll up your sleeves and test the tools in action. No amount of research can replace the insights gained from seeing how a tool performs in your specific environment. The goal is to evaluate its real-world performance - not just the polished experience shown in vendor demos. Plan a structured trial period, typically lasting between 30 and 100 days, to allow your team enough time to get comfortable with the tool and assess its capabilities thoroughly.

Start small with a pilot project instead of diving into a full implementation. Test the AI tool on a limited segment of your operations - like one campaign or department. This focused approach helps you identify potential issues early on without disrupting your entire workflow. Before starting, set clear, measurable goals that align with your business challenges, and make sure your data is standardized to avoid skewed results. Companies that prioritize onboarding during trials have reported up to a 15% boost in conversion rates.

Pay attention to key metrics like rewrite time (how long it takes to refine the AI's output) and consistency (whether the tool delivers the same quality across multiple tries with the same prompts). If the tool fails more than 20% of your evaluation tasks, the costs of fixing errors and ensuring quality may outweigh its benefits. This trial phase also provides a chance to fine-tune your evaluation criteria.

Run Trial Periods and Beta Tests

Your trial should address three main challenges: cost, time, and resistance. Start by auditing your data, then dedicate the first two days to setting up the tool and creating prompts. Use the next few days to test core tasks, and spend the remainder of the trial identifying friction points and assessing readiness for a larger rollout.

"Trial is the start of everything. Without it, you don't have a business." – Roger Martin, Professor and Strategy Advisor

Evaluate the tool by testing it on 10–20 real-world tasks instead of relying on a single, idealized demo scenario. Use the same prompts and assets for each tool to ensure an objective comparison. Observe how your team interacts with the tool in real time. This will help you understand which features are genuinely useful and which may be overlooked. For example, companies that integrate predictive analytics into their sales processes and properly test these tools have seen up to a 50% increase in lead conversions. These insights will be invaluable as you move closer to making a final decision.

Collect Team Feedback

Once the testing phase wraps up, gather feedback from everyone who used the tool. This includes daily users across departments, from IT to procurement, to get a well-rounded view of its impact. Keep in mind that resistance to change is natural - many people prefer sticking to familiar workflows. Honest feedback about the learning curve, ease of use, and overall fit within existing workflows will help you determine if the tool truly reduces workload or creates new challenges.

Consider hosting workshops to address skill gaps and ensure your team can make the most of the tool. Track usability metrics like the number of clicks needed to complete a task, the frequency of errors or hallucinations, and how easily data can be exported without manual work. This diverse input will be critical for selecting the right tool and ensuring its successful adoption.

Step 6: Make Your Final Decision

Now that you've defined your criteria and analyzed insights from your pilot tests, it's time to make the final call. This step is about choosing a tool that provides measurable value and aligns with your business goals for the long term - not just going for the flashiest option. According to a recent MIT study, 95% of AI investments fail to deliver measurable returns due to poor value assessment.

Review Pilot Results and ROI

Start by comparing your pilot results to 8–12 weeks of pre-AI baseline data. Look at metrics like time, cost, volume, error rates, and revenue. Evaluate the benefits in terms of hours saved, labor cost reduction (hours saved × hourly rate), and revenue impact (conversion lift × traffic × average order value). For example, if your team saved 1,875 hours annually at $125 per hour, that translates to $234,375 in efficiency gains.
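The ROI formulas in this step translate directly into code. This Python sketch reproduces the 1,875-hour example and also subtracts time spent correcting AI output, flagging it against the 20% rule of thumb discussed in this step. The correction hours and the revenue inputs are hypothetical, and treating conversion lift as an absolute change in conversion rate is an interpretive assumption.

```python
CORRECTION_LIMIT = 0.20  # rule of thumb from the text: >20% of "saved" time spent fixing errors


def efficiency_gain(hours_saved: float, hourly_rate: float) -> float:
    """Labor cost reduction = hours saved x hourly rate."""
    return hours_saved * hourly_rate


def revenue_impact(conversion_lift: float, traffic: int, avg_order_value: float) -> float:
    """Revenue impact = conversion lift x traffic x average order value.

    `conversion_lift` is treated as an absolute change in conversion rate
    (0.005 = half a percentage point), which is an interpretive assumption.
    """
    return conversion_lift * traffic * avg_order_value


def net_efficiency(hours_saved: float, correction_hours: float, hourly_rate: float) -> tuple[float, bool]:
    """Gain after subtracting time spent fixing AI output, plus a flag for the 20% rule."""
    net = efficiency_gain(hours_saved - correction_hours, hourly_rate)
    return net, correction_hours / hours_saved > CORRECTION_LIMIT


if __name__ == "__main__":
    print(f"Gross efficiency gain: ${efficiency_gain(1_875, 125):,.0f}")  # example from the text
    net, flagged = net_efficiency(1_875, correction_hours=300, hourly_rate=125)
    print(f"Net of 300 correction hours: ${net:,.0f} (over 20% limit: {flagged})")
    # Hypothetical funnel: +0.5 points conversion, 200,000 visits, $80 average order.
    print(f"Revenue impact: ${revenue_impact(0.005, 200_000, 80):,.0f}")
```

Reporting the gross and net figures side by side keeps correction work from quietly inflating the efficiency claim.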

However, if your team spends over 20% of the "saved" time fixing AI-generated errors, the costs of corrections could outweigh the benefits. To ensure a well-rounded evaluation, use the "4 Cs" framework:

- Criticality: Consider the consequences of errors.

- Confidentiality: Assess data safety and privacy.

- Complexity: Evaluate how easily AI logic can be explained and understood.

- Comfort: Gauge user adoption and ease of use.

Additionally, calculate financial metrics like Net Present Value (NPV) and the payback period to determine how quickly the tool will break even. A simple table can help organize your findings for a clearer decision-making process:

| ROI Metric | Calculation Method | Example Impact |
|---|---|---|
| Efficiency | Hours Saved × Hourly Labor Cost | $234,375/year (1,875 hours saved at $125/hr) |
| Revenue Growth | Lift % × Traffic × Avg. Order Value | Higher conversion rates in marketing funnels |
| Risk Reduction | Incident Reduction × Avg. Incident Cost | Avoided legal or compliance fees |
| Productivity | Reclaimed Hours → New Output | +25 proposals/month or -20% cycle time |
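The NPV and payback period calculations mentioned in this step can be sketched in Python as follows. The cash flows and the 10% discount rate are hypothetical, and interpolating payback within a period assumes each period's benefit arrives evenly.

```python
from typing import Optional, Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] is the upfront amount at time 0 (usually negative)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_period(cash_flows: Sequence[float]) -> Optional[float]:
    """Periods until cumulative cash flow turns non-negative, or None if it never does.

    Assumes each period's cash flow arrives evenly, so the crossing point is interpolated.
    """
    if cash_flows[0] >= 0:
        return 0.0
    cumulative = cash_flows[0]
    for t, cf in enumerate(cash_flows[1:], start=1):
        if cf > 0 and cumulative + cf >= 0:
            return (t - 1) + (-cumulative / cf)
        cumulative += cf
    return None


if __name__ == "__main__":
    # Hypothetical: $50,000 upfront, then $20,000 net benefit per year for three years.
    flows = [-50_000, 20_000, 20_000, 20_000]
    print(f"Payback: {payback_period(flows):.1f} years")
    print(f"NPV at 10%: {npv(0.10, flows):,.2f}")
```

In this hypothetical the tool pays back in 2.5 years yet has a slightly negative NPV (about -263) at a 10% discount rate, which is why it helps to look at both metrics rather than payback alone.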

Once you've reviewed the numbers, the next step is to evaluate the vendor's reliability and plans for the future.

Evaluate Vendor Support and Future Plans

The vendor's support and vision for the future are just as important as the tool's current capabilities. Look for vendors that provide strong Service Level Agreements (SLAs) for uptime and issue resolution, as well as proactive AI model updates and ongoing training opportunities like workshops and educational resources.

It's also crucial to ensure the vendor supports modularity and allows for model swapping, so you're not locked into a single system that could become outdated. Verify that their development roadmap aligns with your business objectives and incorporates emerging technologies relevant to your industry. For example, while 89% of executives recognize the importance of AI governance for innovation, only 46% have implemented KPIs focused on strategic value. A good vendor should help bridge this gap.

Be diligent about contractual terms. Only 17% of AI vendors explicitly commit to following all applicable laws in their standard agreements, and 92% claim broad data usage rights - much higher than the 63% average for other software. Insist on transparency regarding model sourcing, review termination clauses to ensure continued access to your data, and negotiate ownership of input data, generated outputs, and any models trained on your proprietary data. Opt for shorter contract terms and lighter commitments to maintain flexibility as the AI landscape evolves. The right vendor will empower your growth, not restrict it.

Conclusion

Key Takeaways for Selecting AI Tools

Choosing the right AI tool in 2026 boils down to aligning it with your specific business needs. Start by clearly defining the problem you're trying to solve - pinpoint your pain points and intended use cases rather than chasing the latest trends. Use the "4 Cs" framework to evaluate tools: Criticality (how much is at stake if errors occur), Confidentiality (data security concerns), Complexity (how understandable the tool is), and Comfort (ease of use).

When matching tools to tasks, reserve higher-cost options for more complex needs. Test each tool with 10–20 real-world tasks to determine which requires the fewest revisions. Tracking "rewrite time" is an effective way to measure return on investment (ROI). This thorough testing process helps ensure the tool you choose is both effective and efficient.

Before fully scaling, revisit governance and total cost of ownership (TCO). Consider indirect costs like training your team, preparing data, and integrating the tool into your existing systems. Keep in mind that 75% of organizations fail to scale AI effectively. To avoid this, conduct targeted pilot programs to confirm the tool's fit for your operations.

For a streamlined selection process, platforms like AI Apps offer a curated directory of over 1,000 AI tools, categorized by functionality - such as Writing, Coding, and Support. With a multi-step verification process, AI Apps ensures you're comparing rigorously vetted tools. Use it to simplify your search, compare options, and make informed decisions based on standardized criteria. It’s a practical way to narrow down choices and find the right tool for your needs.

FAQs

What should I look for to ensure an AI tool can scale with my business?

When assessing an AI tool for scalability, it's crucial to examine how well it can handle growing workloads, adapt to increased demands, and maintain consistent performance. The tool should be capable of processing larger datasets and managing more requests as your requirements grow. Cloud-based solutions are often a smart choice since they can automatically adjust resources - like storage and computing power - based on demand.

Take a close look at the cost structure as your business scales. Opt for pricing models that are predictable, making it easier to plan your budget. Additionally, check for any limitations, such as maximum data size, supported formats, or integration capabilities, to ensure the tool can evolve alongside your business without requiring significant overhauls. Reliability is another key aspect - features like redundancy and fault tolerance are essential to keep operations running smoothly during peak times or unexpected disruptions.

By keeping these considerations in mind, you can select an AI tool that not only meets your current needs but also grows seamlessly with your business.

How can I make sure an AI tool works well with my current systems?

To make sure an AI tool works well with your current systems, start by outlining the software, databases, and workflows you already use. Pinpoint the key data formats and connection points that keep your operations running smoothly. Next, check if the AI tool provides native connectors, open APIs, or pre-built integrations that match your setup.

Before rolling it out completely, conduct a pilot test in a non-production environment. This helps confirm the tool’s compatibility and ensures it performs as expected. Don’t forget to verify that it meets your security and compliance needs, such as encryption standards and regulatory guidelines. Lastly, document the integration steps, provide team training, and prepare troubleshooting guides to make the transition as seamless as possible.

What should I consider for compliance and data security when choosing an AI tool?

When choosing an AI tool, it's important to prioritize regulatory compliance, technical security, and governance practices to ensure your data is protected and aligns with legal standards.

Start by verifying that the tool meets U.S. requirements like the CCPA and HIPAA, as well as industry-specific rules such as FINRA's for financial services. Look for clear privacy policies, certifications, and audit-ready logs that prove compliance. Features like data retention controls, consent management, and options to delete or export data are essential to reduce compliance risks.

Next, evaluate the tool's technical security. Key protections to look for include end-to-end encryption, multi-factor authentication, and role-based access control. Check if the vendor offers deployment options like private cloud or on-premise solutions to ensure your sensitive data remains within a trusted environment. Regular security updates are another must-have.

Lastly, governance is a vital component. Implement an AI-risk framework to monitor how the tool is used, track changes, and maintain detailed audit trails. Conduct regular assessments and establish clear procedures for managing incidents or updates to ensure compliance remains a continuous effort, not a one-time action.